The web is an open place where all sorts of broken client implementations can roam free. In this article, I’ll walk you through how you can help out clients (mostly feed readers and bots) that don’t know that URL #fragments should be dropped from request target addresses.

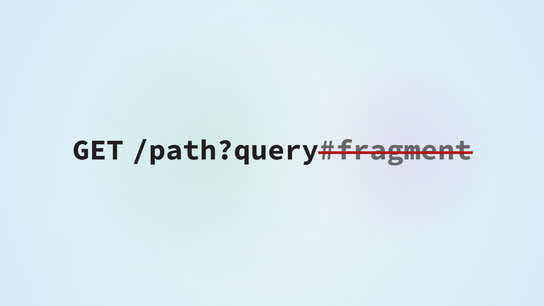

The fragment part of a URL (everything after the first # character) should be omitted when forming a valid HTTP request target. The request target is the page address that an HTTP client requests from a web server. However, going through my server logs, I found a noticeable number of syndication feed readers and bots that either percent-encode the fragment portion of URLs or even send it as-is in their GET requests.
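To make the expected behavior concrete, here is a minimal Python sketch of how a compliant client could derive the request target from a link. The function name `request_target` is hypothetical, and the sketch assumes an absolute http(s) URL. It simply cuts everything from the first "#" onward before keeping the path and query.

```python
from urllib.parse import urlsplit


def request_target(url: str) -> str:
    """Return the origin-form request target (path plus query) for an absolute http(s) URL.

    Everything from the first "#" onward is the fragment, which is never sent to the server.
    """
    without_fragment = url.split("#", 1)[0]
    parts = urlsplit(without_fragment)
    target = parts.path or "/"  # an empty path is sent as "/"
    # urlsplit() reports an empty query for both "/path?" and "/path", so check for
    # the "?" delimiter explicitly to keep "/path?" distinct from "/path".
    if "?" in without_fragment:
        target += "?" + parts.query
    return target


print(request_target("https://www.example.com/path#fragment"))  # /path
print(request_target("https://www.example.com/path?query&#fragment"))  # /path?query&
```

The broken clients described here skip the first step and leave the fragment in the request target, either literally or as %23.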

These requests end up with a 404 Not Found error because the HTTP server interprets the fragment as part of the URL’s path. It’s not Hurricane Katrina, but it’s still a problem. I’ve sent patches to fix some of the broken implementations, but I can’t possibly fix all of them. You can still recover traffic from clients and bots that make this mistake with some redirect magic.

The following Apache web server configuration example finds the first literal or URL-escaped # character, drops it and anything after it from the URL, and finally redirects the client to the corrected address with the fragment removed.

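```apache
# Enable mod_rewrite for this context
RewriteEngine On
# Capture everything before the first literal "#" or URL-escaped "%23"
RewriteCond %{REQUEST_URI} "(.*?)(#|\%23)"
# Redirect to the captured part (%1 is the first group of the last matched RewriteCond)
RewriteRule "(.*)" "%1" [last,redirect=302]
```

Notably, this redirect discards the fragment portion of the URL entirely. That’s acceptable: by triggering this rewrite rule, a client has already demonstrated that it’s incapable of properly handling URL fragments.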
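If you want to check which logged request paths the rule would catch before deploying it, the same matching logic can be approximated in Python. This is a sketch only: `fragment_redirect` is a hypothetical helper, not part of any tool, and it mirrors just the regular expression. It does not model how Apache decodes `%{REQUEST_URI}` or how the rule behaves in different configuration contexts.

```python
import re
from typing import Optional

# Same pattern as the RewriteCond: lazily capture everything before the
# first literal "#" or percent-encoded "%23".
FRAGMENT_PATTERN = re.compile(r"(.*?)(#|%23)")


def fragment_redirect(request_path: str) -> Optional[str]:
    """Return the redirect target for a path that carries a stray fragment, or None."""
    match = FRAGMENT_PATTERN.search(request_path)
    if match is None:
        return None  # nothing to fix; the request is served normally
    return match.group(1)  # corresponds to %1 in the RewriteRule


# Hypothetical request paths of the kind broken clients send
for path in ("/blog/post%23comments", "/blog/post#comments", "/blog/post"):
    print(path, "->", fragment_redirect(path))
```

Because the capture group is lazy, only the text before the first "#" or "%23" is kept, just as in the Apache rule.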

I’ve had no luck constructing a similar redirect rule for Nginx. It seems Nginx, understandably, refuses to believe a client would ever submit the fragment portion of a URL as part of a request and simply refuses to match against it.

Working around the problem using a different URL pattern

Broken clients will often remain broken, and new broken clients come into the mix all the time. There will always be buggy clients. However, you can somewhat mitigate the problem for links on your website that contain fragments.

By placing the fragment after an empty query string (a bare ? delimiter) or after a trailing & that follows the last query value group in your links, most web servers should be able to handle incoming requests without any special configuration or redirects. These examples should make the method clearer:

https://www.example.com/path?#fragment

https://www.example.com/path?query&#fragment

If these requests came from an HTTP-compliant client, they’d contain an empty query string or an unrecognized empty query value group. If they were to come from a broken client, however, the path portion of the URL wouldn’t be corrupted by the tagged-on fragment. The requests would instead contain nonsensical query strings or an unrecognized query value group. The point is that most web servers already know how to deal with unexpected query strings, so the URLs should remain functional.
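To see why the path survives, the following Python sketch simulates a broken client that keeps the fragment, either as-is or percent-encoded, and then splits the request target the way a typical server does: the path before the first ?, the query string after it. Both helper functions are hypothetical, and the sketch assumes origin-form request targets such as /path?#fragment.

```python
def broken_target(link_target: str, encode: bool = True) -> str:
    """Hypothetical buggy client: keeps the fragment instead of dropping it.

    With encode=True the "#" is percent-encoded as "%23"; otherwise it is sent as-is.
    """
    return link_target.replace("#", "%23") if encode else link_target


def split_target(request_target: str) -> tuple[str, str]:
    """Split a request target like a typical server: path before the first "?", query after."""
    path, _, query = request_target.partition("?")
    return path, query


for link in ("/path#fragment", "/path?#fragment", "/path?query&#fragment"):
    print(link, "->", split_target(broken_target(link)))
```

Only the plain /path#fragment link yields a corrupted path (/path%23fragment); the other two link styles leave /path intact and push the leftover fragment into the query string, which the server can ignore.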

The issue with this approach is that a client following such a link from, e.g., /path would have to issue another request to /path? instead of just jumping to the fragment on the page it has already loaded. Some clients would normalize away the empty query string and not issue a second request; however, this isn’t standards-compliant behavior either.

The best approach seems to be to just redirect any broken requests when they’re encountered.